Publishing a newly created Git branch to a remote repository can be easier than you might expect.

Introduction

Creating a new branch and pushing (publishing) it to a remote repository is a very common situation in various Git workflow models. Most people create a lot of new branches: to open a pull (merge) request, to show code to remote teammates, or just to back up local changes overnight.

Unfortunately, it is not as easy in Git as it could be:

~/C/my-fancy-project (master|✓) $ git checkout -b featureX

Switched to a new branch 'featureX'

~/C/my-fancy-project (featureX|✓) $ git push

fatal: The current branch featureX has no upstream branch.

To push the current branch and set the remote as upstream, use

git push --set-upstream origin featureX

Hmm, just copy-paste the given line and you are set:

~/C/my-fancy-project (featureX|✓) $ git push --set-upstream origin featureX

Total 0 (delta 0), reused 0 (delta 0)

To /tmp/my-fancy-project-remote/

* [new branch] featureX -> featureX

Branch 'featureX' set up to track remote branch 'featureX' from 'origin'.

Of course you may memorize it after some time (however, I observe that many people do not) or even use the shorter syntax:

~/C/my-fancy-project (featureX|✓) $ git push -u origin featureX

Total 0 (delta 0), reused 0 (delta 0)

To /tmp/my-fancy-project-remote/

* [new branch] featureX -> featureX

Branch 'featureX' set up to track remote branch 'featureX' from 'origin'.

Nonetheless, for me it was too many characters to type, especially when repeated many times in a typical workflow with a single remote repository (usually named origin).

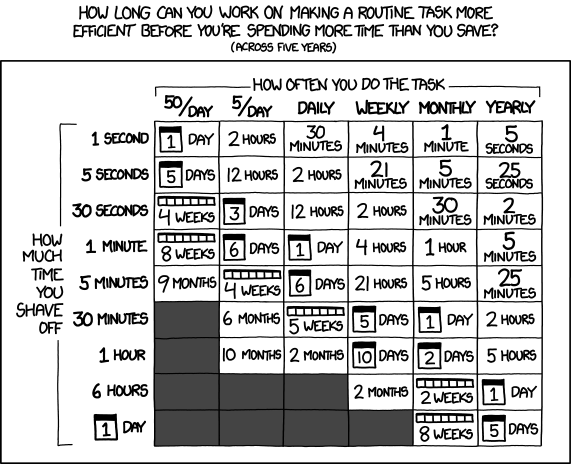

xkcd – Is It Worth the Time? – https://xkcd.com/1205/

Solution

The perfect solution for me would be just one command. Something like git publish.

~/C/my-fancy-project (master|✓) $ git checkout -b featureY

Switched to a new branch 'featureY'

~/C/my-fancy-project (featureY|✓) $ git publish

Total 0 (delta 0), reused 0 (delta 0)

To /tmp/my-fancy-project-remote/

* [new branch] featureY -> featureY

Branch 'featureY' set up to track remote branch 'featureY' from 'origin'.

Wouldn't it be nice?

As you may know from my previous posts, I am a big enthusiast of comprehensive automation (such as CI/CD) or at least semi-automation (aka “making things easier”) when the former is not possible (or viable). Therefore, at the time, I started looking for possible improvements. Git is written by developers for developers and offers several ways to customize it. The easiest one is to write an alias. In this case, it is as simple as adding the following to ~/.gitconfig:

[alias]

# Gets the current branch name - useful in other commands

# Git 2.22 (June 2019) introduced "git branch --show-current"

branch-name = "!git rev-parse --abbrev-ref HEAD"

# Pushes the current branch to the remote "origin" (or the remote passed as the parameter)

# and set it to track the upstream branch

publish = "!sh -c 'git push -u ${1:-origin} $(git branch-name)' -"

As a result, in addition to the basic case (pushing the current branch to origin and, if needed, setting its upstream branch there):

$ git publish

it is also possible to publish to some other remote repository:

$ git publish myOtherRemote
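To make the mechanics of the alias easier to follow, here is a minimal dry-run sketch in plain shell. The function name publish_cmd and its optional second argument are assumptions made for this illustration only; they are not part of the alias. In the alias itself, the trailing "-" after the quoted script becomes $0 of the sh -c script, so the first argument given to git publish lands in $1, and ${1:-origin} falls back to origin when that argument is missing.

```shell
#!/bin/sh
# Hypothetical dry-run helper: builds the command the publish alias would run,
# but only prints it instead of pushing anything.
publish_cmd() {
    # ${1:-origin} expands to "origin" when the first argument is missing or empty
    remote="${1:-origin}"
    # The second argument exists only in this sketch; when it is absent, the
    # current branch is resolved the same way as in the branch-name alias
    branch="${2:-$(git rev-parse --abbrev-ref HEAD)}"
    printf 'git push -u %s %s\n' "$remote" "$branch"
}

publish_cmd "" featureX              # prints: git push -u origin featureX
publish_cmd myOtherRemote featureX   # prints: git push -u myOtherRemote featureX
```

Printing the command rather than running it keeps the sketch safe to try in any directory.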

Cleaning up

As a counterpart to git publish, it is easy to implement git unpublish:

[alias]

# Deletes the remote version of the current branch from the remote "origin"

# (or the remote passed as the parameter)

unpublish = "!sh -c 'git push ${1:-origin} :$(git branch-name) && git branch --unset-upstream $(git branch-name)' -"

to remove the current branch from a remote repository (origin, or the one passed as the first parameter):

~/C/my-fancy-project (featureNoLongerNeeded|✓) $ git unpublish

To /tmp/my-fancy-project-remote/

- [deleted] featureNoLongerNeeded

instead of:

~/C/my-fancy-project (featureNoLongerNeeded|✓) $ git push origin --delete featureNoLongerNeeded

To /tmp/my-fancy-project-remote/

- [deleted] featureNoLongerNeeded

or

~/C/my-fancy-project (featureNoLongerNeeded|✓) $ git push origin :featureNoLongerNeeded

To /tmp/my-fancy-project-remote/

- [deleted] featureNoLongerNeeded

Again, shorter and easier to remember.
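For anyone who wants to try the two steps behind unpublish without touching a real project, the sketch below runs them against a throwaway repository pair. The temporary paths and the demo committer identity are assumptions made for this example only.

```shell
#!/bin/sh
# Throwaway sandbox: a bare "remote" and a working repository (hypothetical paths)
tmp=$(mktemp -d)
remote="$tmp/remote.git"
work="$tmp/work"
git init -q --bare "$remote"
git init -q "$work"
git -C "$work" checkout -q -b featureNoLongerNeeded
# The demo identity is passed inline so that no Git configuration is modified
git -C "$work" -c user.name=demo -c user.email=demo@example.com \
    commit -q --allow-empty -m "initial commit"
git -C "$work" remote add origin "$remote"
git -C "$work" push -q -u origin featureNoLongerNeeded

# The branch is listed on the remote before unpublishing
before=$(git -C "$work" ls-remote --heads origin featureNoLongerNeeded)

# The same two steps the unpublish alias performs
git -C "$work" push -q origin :featureNoLongerNeeded
git -C "$work" branch --unset-upstream featureNoLongerNeeded

# After unpublishing, the remote no longer lists the branch
after=$(git -C "$work" ls-remote --heads origin featureNoLongerNeeded)
echo "before: ${before:-<none>}"
echo "after: ${after:-<none>}"
```

The local branch itself is left in place; only its remote counterpart and the tracking configuration are removed.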

Partial built-in solution

As proposed by the indispensable Łukasz Szczęsny, hassle-free pushing (without pulling) can also be achieved with the Git configuration itself. This may be sufficient if branches are removed automatically after a PR is merged (e.g. in a properly configured GitLab or GitHub). In that case, the push.default configuration parameter has to be set to current:

~/C/my-fancy-project (master|✓) $ git checkout -b featureZ

Switched to a new branch 'featureZ'

~/C/my-fancy-project (featureZ|✓) $ git push -u

fatal: The current branch featureZ has no upstream branch.

To push the current branch and set the remote as upstream, use

git push --set-upstream origin featureZ

~/C/my-fancy-project (featureZ|✓) $ git config --global push.default current

~/C/my-fancy-project (featureZ|✓) $ git push -u

Total 0 (delta 0), reused 0 (delta 0)

To /tmp/my-fancy-project-remote/

* [new branch] featureZ -> featureZ

Branch 'featureZ' set up to track remote branch 'featureZ' from 'origin'.

~/C/my-fancy-project (featureZ|✓) $ git pull

Already up to date.

Please pay attention to the -u flag in git push -u. It is required to set up remote branch tracking. Without it, git pull alone would not work.
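Whether the tracking was actually configured can be checked by asking Git for the upstream of the branch. The sketch below reproduces the scenario in a throwaway repository pair; the temporary paths and the demo committer identity are assumptions made for this example, and push.default is set only in that repository rather than globally.

```shell
#!/bin/sh
# Throwaway sandbox: a bare "remote" and a working repository (hypothetical paths)
tmp=$(mktemp -d)
remote="$tmp/remote.git"
work="$tmp/work"
git init -q --bare "$remote"
git init -q "$work"
git -C "$work" checkout -q -b featureZ
# The demo identity is passed inline so that no Git configuration is modified
git -C "$work" -c user.name=demo -c user.email=demo@example.com \
    commit -q --allow-empty -m "initial commit"
git -C "$work" remote add origin "$remote"

# Repository-local counterpart of: git config --global push.default current
git -C "$work" config push.default current

# With push.default=current, a bare "git push -u" pushes to origin and sets the upstream
git -C "$work" push -q -u

# Ask Git which remote branch featureZ tracks
upstream=$(git -C "$work" rev-parse --abbrev-ref 'featureZ@{upstream}')
echo "$upstream"
```

If the push had been done without -u, the last rev-parse call would fail because no upstream is configured for the branch.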

Summary

I have been using git publish (and git unpublish) for many years and I really like it. Taking the opportunity of writing this Git Tricks blog series, I decided to share it with others (having fallen in love with the command line :-) ). Remember, however, that it is now a part of GitKurka (or its uncensored upstream project), a set of useful and productive tweaks and aliases for Git.

Btw, I do not conduct Git training anymore, but people wanting to develop their Git skills even more may consider an on-site course from Bottega (PL/EN), an online course by Maciej Aniserowicz (devstyle.pl) (PL) or a comprehensive Pro Git book (EN).

Update 20190910. Added partial built-in alternative solution suggested by Łukasz Szczęsny.

Update 20190913. Added missing “branch-name” alias. Pointed out by Paul in a comment.

The lead photo is based on Iva Balk's work published on Pixabay, Pixabay License.