Get to know what you can expect from Spock 2.0 M2 (based on JUnit 5), how to migrate to it in Gradle and Maven, and why it is important to report spotted problems :).

Visit https://blog.solidsoft.pl/ to follow my new articles.

Important note. I definitely do not encourage you to migrate your real-life project to Spock 2.0 M1 for good! This is the first (pre-)release of 2.x, with an API that is not yet finalized, intended to gather user feedback on Spock’s internal migration to JUnit Platform.

This blog post is meant to encourage you to try a test migration of your projects to Spock 2.0, see what starts to fail, and fix it (if caused by your tests) or report it (if it is a regression in Spock itself). As a result, on the Spock side, it will be possible to improve the code base before Milestone 2. The benefit for you, in addition to contributing to a FOSS project :-), will be awareness of the required changes (kept in a side branch) and readiness for migration once Spock 2.0 is more mature.

I plan to update this blog post when the next Spock 2 versions are available.

Updated 2020-02-10 to cover Spock 2.0 M2 with a dedicated Groovy 3 support.

Powered by JUnit Platform

The main change in Spock 2.0 M1 is the migration to JUnit 5 (precisely speaking, tests are executed with JUnit Platform 1.5, part of JUnit 5, instead of the JUnit 4 runner API). This is very convenient, as Spock tests should be automatically recognized and executed everywhere the JUnit Platform is supported (IDEs, build tools, quality tools, etc.). In addition, the features provided by the platform itself (such as parallel test execution) should (eventually) also be available for Spock.

Gradle

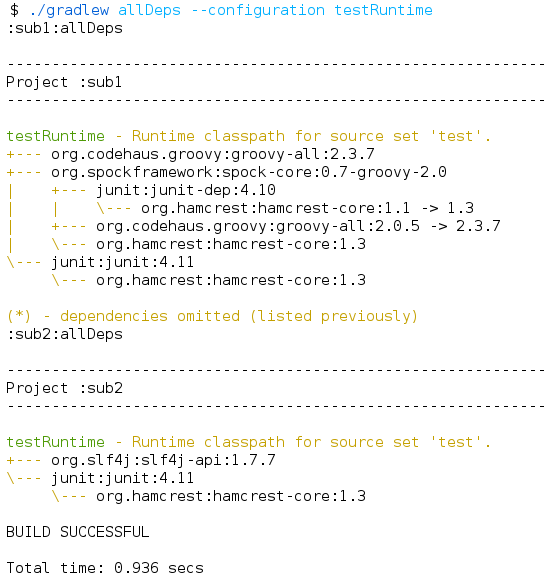

To bring Spock 2 to a Gradle project, you need to bump the Spock version:

testImplementation('org.spockframework:spock-core:2.0-M2-groovy-2.5')

and enable test execution on JUnit Platform:

test {
    useJUnitPlatform()
}

Update 20200218. That configuration is enough, but as Tomek Przybysz reminded in his comment, Gradle by default doesn’t fail if no tests are found. This may lead to a situation where, after making that switch, the build finishes successfully and gives a false sense of security, while no tests are executed at all.

It is a known issue in Gradle, not limited to Spock. As a workaround, the aforementioned configuration might be extended to:

test {
    useJUnitPlatform()
    afterSuite { desc, result ->
        if (!desc.parent) {
            if (result.testCount == 0) {
                throw new IllegalStateException("No tests were found. Failing the build")
            }
        }
    }
}
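Putting the Gradle pieces from this section together, a complete build.gradle might look like the sketch below. The groovy plugin and the mavenCentral() repository are my assumptions (they are not shown in the snippets above), and the sketch relies on Groovy 2.5 arriving as a transitive dependency of spock-core, so adjust it to your project.

```groovy
// build.gradle: a minimal sketch, not a definitive setup
plugins {
    id 'groovy' // assumption: tests are written in Groovy and compiled by the Groovy plugin
}

repositories {
    mavenCentral() // assumption: artifacts are resolved from Maven Central
}

dependencies {
    // Spock 2.0 M2 built for Groovy 2.5 (Groovy itself is expected to come in transitively)
    testImplementation 'org.spockframework:spock-core:2.0-M2-groovy-2.5'
}

test {
    // execute tests with JUnit Platform instead of the JUnit 4 runner
    useJUnitPlatform()

    // fail the build if the root suite finished without executing any test
    afterSuite { desc, result ->
        if (!desc.parent && result.testCount == 0) {
            throw new IllegalStateException("No tests were found. Failing the build")
        }
    }
}
```

Running `gradle test` against a project with no specifications should then fail instead of reporting a misleading success.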

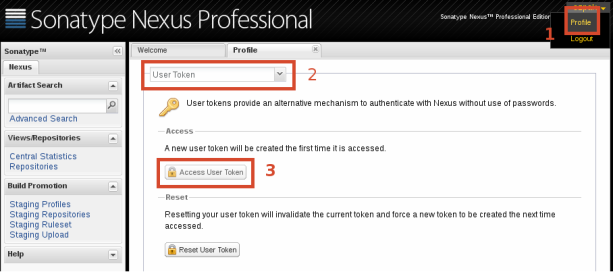

Maven

With Maven, on the other hand, it is still required to switch to a newer Spock version:

<dependency>
    <groupId>org.spockframework</groupId>
    <artifactId>spock-core</artifactId>
    <version>2.0-M2-groovy-2.5</version>
    <scope>test</scope>
</dependency>

but that is all. The Surefire plugin (if you use version 3.0.0+) executes JUnit Platform tests by default, if junit-platform-engine (a transitive dependency of Spock 2) is found.

The minimal working project for Gradle and Maven is available from GitHub.

Other changes

With such a big change as the migration to JUnit Platform, the number of other changes in Spock 2.0 M1 is limited, to make finding the reason for potential regressions a little bit easier. As a side effect of the migration itself, the required Java version is 8.

In addition, all parameterized tests are (finally) “unrolled” automatically. That is great; however, there is currently no way to “roll” particular tests, as known from spock-global-unroll for Spock 1.x.
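To illustrate what automatic unrolling refers to, here is a minimal sketch of a data-driven feature method. The class name, the method name, and the data are hypothetical and chosen only for illustration. In Spock 1.x, such a method had to be annotated with @Unroll (or covered by spock-global-unroll) to have each row reported as a separate test; with Spock 2.0 that should happen without any annotation. The exact naming of the reported iterations may differ between milestones, so I do not show it here.

```groovy
import spock.lang.Specification

// Hypothetical specification used only to illustrate a data-driven feature method
class MaxSpec extends Specification {

    // No @Unroll here: in Spock 2.0 each data row is expected to be reported separately
    def "max of #a and #b is #expected"() {
        expect:
        Math.max(a, b) == expected

        where:
        a | b || expected
        1 | 3 || 3
        7 | 4 || 7
        0 | 0 || 0
    }
}
```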

Some other changes (such as temporarily disabled SpockReportingExtension) can be found in the release notes.

More (possibly breaking) changes are expected to be merged into Milestone 2.

Issue with JUnit 4 rules

Tests using JUnit 4 @Rule (or @ClassRule) fields are expected to fail with an error message suggesting that the requested objects were not created/initialized before a test (e.g. NullPointerException or IllegalStateException: the temporary folder has not yet been created) or were not verified/cleaned up after it (e.g. soft assertions from AssertJ). The Rules API is no longer supported by JUnit Platform. However, to make the migration easier (TemporaryFolder is probably very often used in Spock-based integration tests), there is a dedicated spock-junit4 module which internally wraps JUnit 4 rules into Spock extensions and executes them in Spock’s lifecycle. As it is implemented as a global extension, the only thing required is to add another dependency. In Gradle:

testImplementation 'org.spockframework:spock-junit4:2.0-M2-groovy-2.5'

or in Maven:

<dependency>
    <groupId>org.spockframework</groupId>
    <artifactId>spock-junit4</artifactId>
    <version>2.0-M2-groovy-2.5</version>
    <scope>test</scope>
</dependency>

That makes migration easier, but it is good to think about switching to a native Spock counterpart, if available/feasible.
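For reference, the kind of test affected by this issue could look like the sketch below. The specification name and the file name are hypothetical. With only spock-core 2.0 on the classpath, the rule is not applied and the test is expected to fail as described above; with spock-junit4 added, it should work as it did in Spock 1.x.

```groovy
import org.junit.Rule
import org.junit.rules.TemporaryFolder
import spock.lang.Specification

// Hypothetical specification relying on a JUnit 4 rule
class TemporaryFolderSpec extends Specification {

    // A JUnit 4 rule: it is only applied when spock-junit4 is on the test classpath
    @Rule
    TemporaryFolder tmp = new TemporaryFolder()

    def "should write to a file in the temporary folder"() {
        when:
        File file = tmp.newFile('sample.txt') // fails if the rule was not initialized
        file.text = 'hello'

        then:
        file.exists()
        file.text == 'hello'
    }
}
```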

Groovy 3 support

Updated 2020-02-10. The whole Groovy 3 section was added to cover changes in Spock 2.0 Milestone 2.

After my complaints about the runtime failure when Spock 2.0 M1 is used with Groovy 3.0, I rolled up my sleeves to check how hard it would be to provide that support. It took me some time; however, after 23 (multiple times rebased) commits, constructive feedback from other Spock contributors and a fruitful discussion with the Groovy developers, the support for Groovy 3 has been merged and is available as the main feature of Spock 2.0 M2, released right after Groovy 3.0.0 final.

To use Spock 2.0 M2 with Groovy 3 it is enough to just use the spock-*-2.0-M2 artifacts with the -groovy-3.0 suffix.

It is worth noting that Groovy 3 is not backward compatible with Groovy 2. To keep one Spock codebase, there is a layer of abstraction in Spock that allows building (and using) the project with both Groovy 2 and 3. As a result, an extra artifact spock-groovy2-compat is (automatically) used in projects with Groovy 2. It is very important not to mix the spock-*-2.x-groovy-2.5 artifacts with the groovy-*-3.x artifacts on the classpath. This may result in weird runtime errors.
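As a sketch, a Gradle dependencies block for a Groovy 3 project could look as follows. The explicit Groovy dependency and its coordinates are my assumption for illustration (Groovy may also arrive transitively); the important part is that the Spock suffix and the Groovy major version match.

```groovy
dependencies {
    // assumption: Groovy 3 declared explicitly to make the intended version visible
    testImplementation 'org.codehaus.groovy:groovy:3.0.0'

    // the -groovy-3.0 variant of Spock, matching the Groovy version above
    testImplementation 'org.spockframework:spock-core:2.0-M2-groovy-3.0'

    // do NOT combine the Groovy 3 artifacts with a Spock variant built for Groovy 2.5, e.g.:
    // testImplementation 'org.spockframework:spock-core:2.0-M2-groovy-2.5'
}
```

If the project also uses spock-junit4 or other spock-* modules, they should carry the same -groovy-3.0 suffix.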

I’m really happy that developers can immediately start testing the new Groovy 3.0.0 with Spock 2.0-M2 in their projects. In addition, as Spock is a quite important (and low-level) project in the Groovy ecosystem, it was nice to confirm that Groovy 3 works properly with it (and to report, along the way, a few minor detected issues to make Groovy even better ;-) ).

Other issues and limitations

Updated 2020-02-10. This section originally referred to limitations of Spock 2.0 M1. Milestone 2 supports Groovy 3.

Spock 2.0 M1 is compiled and tested only with Groovy 2.5.8. As of M1, execution with Groovy 3.0 is blocked at runtime. Unfortunately, instead of a clear error message about an incompatible Groovy version, there is only a very cryptic error message:

Could not instantiate global transform class org.spockframework.compiler.SpockTransform specified at jar:file:/.../spock-core-2.0-M1-groovy-2.5.jar!/META-INF/services/org.codehaus.groovy.transform.ASTTransformation because of exception java.lang.reflect.InvocationTargetException

This has already been reported and should be improved by M2.

Sadly, the limitation to Groovy 2.5 only reduces potential feedback from people experimenting with Groovy 3, which is pretty close to a stable version (RC2 as of 2019/2020). It’s especially inconvenient as many Spock tests just work with Groovy 3 (of course there are some corner cases). There is a chance that Spock 2, before going final, will be adjusted to the changes in Groovy 3, or at least that the aforementioned hard limitation will be lifted. In the meantime, testing Groovy 3 support requires the snapshot version, 2.0-groovy-2.5-SNAPSHOT (which has that check disabled).

Summary

The action to take after reading this post is simple. Try to temporarily play with Spock 2.0 M2 in your projects and report any spotted issues, to help make Spock 2.0 even better :).