Find out how fast (or slow) mutation testing really is, based on tangible results from real FOSS projects, and how it can be optimized (with a real implementation in PIT, a mutation testing tool for Java projects).

One of the questions I got after my first presentation about mutation testing with PIT at DevConf.cz in Brno years ago was “how long would it take in my project?”. I wasn’t able to answer precisely. The execution time depends on multiple factors: the size of the project, the number of tests and the kind of tests (unit/integration), just to mention a few. There are also some optimization techniques which can speed up the analysis. To give you a rough idea, I collected the results of mutation testing sessions performed with PIT on two popular FOSS projects.

I assume that you already know something about mutation testing. If not, please read that article before going further.

Warm-up

As my guinea pigs I chose two popular open source projects: AssertJ, which provides “fluent assertions for Java”, and Joda-Time, which provides “a quality replacement for the Java date and time classes” and was a must for Java projects before Java 8, where a similar solution became available out of the box.

AssertJ can be called a medium-sized project by FOSS standards. It has ~85K lines of production code and 150K lines of tests (as measured by the IDEA statistics plugin, including empty lines and comments). Code compilation takes ~2 seconds and test execution ~12 seconds (almost 10,000 tests, mostly unit tests). Joda-Time is somewhat smaller, with ~70K LOC of production code and ~72K LOC of tests. Test execution takes ~3 seconds (~4200 tests).

I chose AssertJ and Joda-Time as they have a very solid test harness consisting mostly of unit tests, the best kind of tests in most cases. While integration tests can also be used with mutation testing (with some limitations and side effects), unit tests are the perfect candidate.

Brute force

Based on the PIT analysis described later, I estimated the number of mutants that can be generated at 8000 for AssertJ and 10000 for Joda-Time. Of course, the number depends on the set of mutation operators available in your tool; however, to compare a brute force method with PIT fairly, the same number of mutants should be used for both.

You may ask why more mutants are generated for Joda-Time, which has a smaller codebase. It would be a fair question. PIT (or any other mutation testing tool) generates mutants in the lines where something can be “broken”. As Joda-Time contains more “real logic”, it was possible to generate more mutants than in the AssertJ code, where there is a lot of delegation. In other words, it is usually possible to generate more mutants in a line with an if statement than in a line which just calls some void method in another class. Therefore, the number of mutants does not depend only on the number of lines.
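
To make the difference concrete, here is a minimal sketch with made-up code (the class and method names are hypothetical and not taken from AssertJ or Joda-Time). The line with a comparison can be broken in several ways, for example by shifting the boundary, while a line that merely delegates offers little to mutate. The mutant is written by hand here; a tool such as PIT would generate it automatically.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example code, written only to illustrate where mutants come from.
final class MutantExample {

    // "Real logic": the comparison can be mutated (e.g. >= changed to >),
    // negated, or the returned value can be replaced.
    static boolean isAdult(int age) {
        return age >= 18;
    }

    // A hand-written mutant of isAdult: the boundary has been shifted.
    static boolean isAdultMutant(int age) {
        return age > 18;
    }

    // Pure delegation: about the only thing to mutate is removing the call.
    static void register(List<String> names, String name) {
        names.add(name);
    }

    public static void main(String[] args) {
        // A test checking the boundary value "kills" the mutant,
        // because the original and the mutant disagree for age == 18.
        System.out.println("original(18) = " + isAdult(18));
        System.out.println("mutant(18)   = " + isAdultMutant(18));

        // A test using only age == 30 would let the mutant survive.
        System.out.println("original(30) = " + isAdult(30));
        System.out.println("mutant(30)   = " + isAdultMutant(30));

        List<String> names = new ArrayList<>();
        register(names, "Alice");
        System.out.println("registered   = " + names);
    }
}
```

A test suite that never checks the value 18 would report full line coverage for `isAdult` and still miss the mutant, which is exactly the kind of gap mutation testing is meant to reveal.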

Let’s get back to our calculations, starting with AssertJ and the brute force algorithm. For every mutant the code has to be compiled (8000 * 2 seconds) and all tests have to be executed (8000 * 12 seconds). That gives 112000 seconds in total, which is ~1867 minutes, over 31 hours! Even for the smaller Joda-Time, assuming ~1 second of compilation, it is still 10000 * 1 second + 10000 * 3 seconds = 40000 seconds, which is ~667 minutes, over 11 hours!
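
The same back-of-the-envelope estimate can be written as a tiny program. It is only a sketch of the arithmetic above; the class and method names are made up for illustration, and the inputs are the rough numbers quoted in this post.

```java
import java.util.Locale;

// Back-of-the-envelope estimate of brute force mutation testing time.
final class BruteForceEstimate {

    // In the brute force approach every mutant needs a full compilation
    // and a full run of the whole test suite.
    static long totalSeconds(long mutants, long compileSeconds, long testSeconds) {
        return mutants * (compileSeconds + testSeconds);
    }

    static String describe(String project, long seconds) {
        return String.format(Locale.ROOT, "%s: %d s = %.0f min = %.1f h",
                project, seconds, seconds / 60.0, seconds / 3600.0);
    }

    public static void main(String[] args) {
        // Rough inputs from the text: number of mutants, compilation time, test time.
        System.out.println(describe("AssertJ", totalSeconds(8_000, 2, 12)));
        System.out.println(describe("Joda-Time", totalSeconds(10_000, 1, 3)));
    }
}
```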

Wow, over 11 and 31 hours, for projects that are not that large. It is one of the reasons why mutation testing, first proposed by Richard Lipton in 1971 (over 40 years ago!), remained a purely academic technique for a long time. Let’s take a look at how it can be optimized to make it practical in real enterprise projects.

Optimizations

Test selection

Running all tests for every mutant is highly inefficient. One naive approach is to rely on a naming convention: tests are usually named SomeProductionClass*Test. However, it tends to underestimate test suite effectiveness, as not all tests are named that way (especially integration/acceptance ones) and typos happen. Another idea is static call analysis. It is more reliable; however, it completely misses reflective calls and can also have problems with polymorphism.

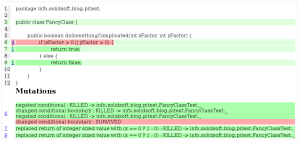

PIT, in turn, leverages other techniques. First of all, the tests are executed in a “normal” mode and a standard code coverage metric is gathered. Thanks to that, mutants with no “standard” coverage can be skipped. There is no chance to have them killed, as no test even executes the given line. That technique can be especially beneficial if PIT is used in a project with a small number of automated tests (low standard code coverage). What is more, PIT knows exactly which tests execute a particular line. Therefore, no test is ever run against a mutant that it does not execute. This is essential to limit the number of tests executed per mutant. As a bonus, PIT (based on the first execution) is aware of the execution time of each test. Tests covering a given mutant can be ordered to have the fast ones executed first. Once a mutant has been killed, the subsequent (slower) tests can be skipped.
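
The three ideas above (skip uncovered mutants, run only the covering tests, run the fastest first and stop at the first kill) can be sketched as follows. This is a simplified illustration and not PIT's actual code: all names are hypothetical, coverage is modelled as a plain map from a line number to the tests executing it, and the `killsMutant` predicate stands in for really running a test against a mutant.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.function.BiPredicate;

// Simplified, hypothetical model of coverage-based test selection.
final class TestSelectionSketch {

    // A test with the execution time measured during the initial coverage run.
    static final class TestInfo {
        final String name;
        final long millis;

        TestInfo(String name, long millis) {
            this.name = name;
            this.millis = millis;
        }
    }

    enum Status { NO_COVERAGE, KILLED, SURVIVED }

    // Analyses a single mutant placed on mutatedLine.
    // Names of the tests that really had to be executed are appended to executedLog.
    static Status analyse(int mutatedLine,
                          Map<Integer, List<TestInfo>> coverage,
                          BiPredicate<Integer, String> killsMutant,
                          List<String> executedLog) {
        List<TestInfo> covering = coverage.getOrDefault(mutatedLine, List.of());
        if (covering.isEmpty()) {
            return Status.NO_COVERAGE; // no test executes the line, nothing to run
        }
        // Only covering tests are considered, cheapest first.
        List<TestInfo> fastestFirst = new ArrayList<>(covering);
        fastestFirst.sort(Comparator.comparingLong((TestInfo t) -> t.millis));
        for (TestInfo test : fastestFirst) {
            executedLog.add(test.name);
            if (killsMutant.test(mutatedLine, test.name)) {
                return Status.KILLED; // remaining (slower) tests are skipped
            }
        }
        return Status.SURVIVED;
    }

    public static void main(String[] args) {
        Map<Integer, List<TestInfo>> coverage = Map.of(
                10, List.of(new TestInfo("slowIntegrationTest", 900),
                            new TestInfo("fastUnitTest", 5)));
        // Assumption for the demo: every covering test detects the mutant.
        BiPredicate<Integer, String> oracle = (line, test) -> true;

        List<String> executed = new ArrayList<>();
        System.out.println(analyse(10, coverage, oracle, executed) + " after running " + executed);

        executed.clear();
        System.out.println(analyse(42, coverage, oracle, executed) + " after running " + executed);
    }
}
```

In the demo the mutant on line 10 is killed by the fast unit test alone, so the 900 ms test never runs, and the mutant on line 42 is reported without executing any test at all.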

Those optimizations (among other technical solutions, such as creating mutants at the bytecode level instead of the source code level, which is much faster, albeit harder to implement and subject to some limitations) contribute to a much faster mutation testing analysis in comparison to the theoretical brute force mode.

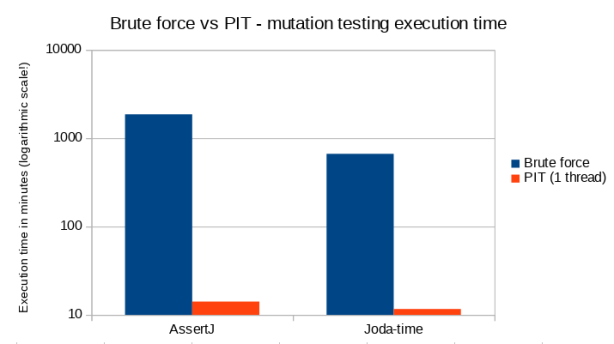

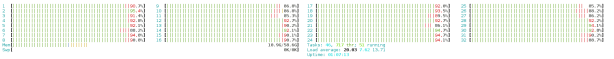

Let’s compare the theoretical brute force analysis time with the one achieved with a vanilla PIT configuration.

| Project | Brute force (minutes) | PIT, 1 thread (minutes) |
|---|---|---|
| AssertJ | 1866.67 | 14.15 |
| Joda-Time | 666.67 | 11.65 |

Looking at the chart, please notice that the Y-axis has a logarithmic scale (!). It’s ~14 minutes for AssertJ (as opposed to ~1867 minutes, over 31 hours) and ~12 minutes for Joda-Time (compared to ~667 minutes, over 11 hours). That is ~130 and ~60 times faster, respectively. It looks good, but it can be done even better.

Parallel execution

One consequence of Moore’s law breaking down years ago was an increase in the number of cores in modern CPUs. Nowadays, 4 or 8 virtual cores are standard in a developer workstation, and servers can have many more. PIT, advertised as “a state of the art in mutation testing”, supports parallel execution in addition to the aforementioned optimizations. Let’s take a look at how much can be gained in our test cases.

Test environment

All tests were performed on a dedicated m4.4xlarge AWS instance (32 virtual cores, 2x Intel(R) Xeon(R) CPU E5-2680 v2 @ 2.80GHz with 8 physical cores each, and 64GB RAM). Most of the executions were repeated to verify the results; however, the methodology was far from that required in scientific research (which was definitely not the aim of my work).

Raw results

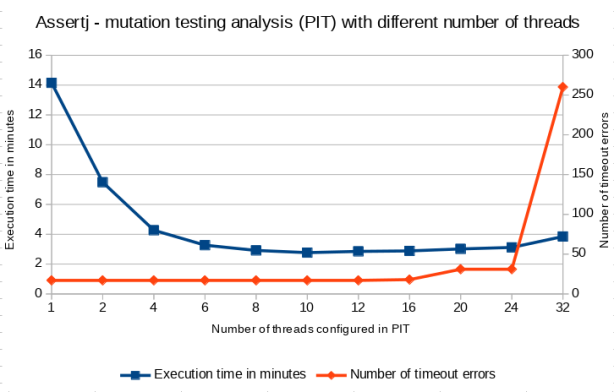

AssertJ

| Number of threads | 1 | 2 | 4 | 6 | 8 | 10 | 12 | 16 | 20 | 24 | 32 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Execution time in minutes | 14.15 | 7.48 | 4.27 | 3.27 | 2.92 | 2.77 | 2.85 | 2.88 | 3.02 | 3.12 | 3.85 |
| Number of timeout errors | 17 | 17 | 17 | 17 | 17 | 17 | 17 | 18 | 31 | 31 | 260 |

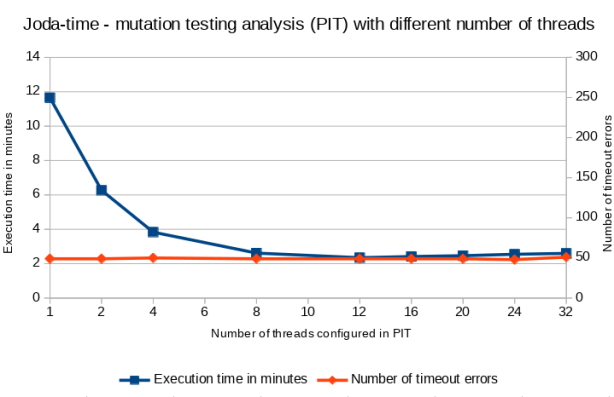

Joda-Time

| Number of threads | 1 | 2 | 4 | 8 | 12 | 16 | 20 | 24 | 32 |
|---|---|---|---|---|---|---|---|---|---|
| Execution time in minutes | 11.65 | 6.27 | 3.83 | 2.62 | 2.35 | 2.42 | 2.47 | 2.55 | 2.60 |
| Number of timeout errors | 49 | 49 | 50 | 49 | 49 | 49 | 49 | 48 | 51 |

Commented results

The results above clearly show that, on a quite powerful machine, it was possible to reduce the mutation testing analysis time about 5 times (in comparison to the sequential analysis) by doing multiple things at the same time. For the test subjects the saturation point was around 10-12 threads (out of 32 virtual cores). In that state the machine seemed to be (almost) fully loaded (see the screenshot below) most of the time (excluding the initial and the final stages of the analysis). Beyond a certain point the number of timeout errors increased significantly (in the case of AssertJ), which could confirm that the machine was overloaded. Unfortunately, I don’t have a good explanation why the system was saturated well below 32 virtual cores (16 physical cores), as PIT should not use more threads than configured and there was no extra parallelism in the tests themselves. As reasonably suggested by Henry Coles, the author of PIT, next time I will attach a profiler to the analysis to try to find potential places to speed things up even further.
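
To see the saturation in numbers, the speedup (single-threaded time divided by the time with N threads) and the parallel efficiency (speedup divided by N) can be computed from the raw results. The sketch below only post-processes the measurements from the two tables above; the class and method names are made up for illustration.

```java
import java.util.Locale;

// Computes speedup and parallel efficiency from the measured execution times.
final class SpeedupTable {

    // Measurements copied from the raw results (execution time in minutes).
    static final int[] ASSERTJ_THREADS = {1, 2, 4, 6, 8, 10, 12, 16, 20, 24, 32};
    static final double[] ASSERTJ_MINUTES =
            {14.15, 7.48, 4.27, 3.27, 2.92, 2.77, 2.85, 2.88, 3.02, 3.12, 3.85};
    static final int[] JODA_THREADS = {1, 2, 4, 8, 12, 16, 20, 24, 32};
    static final double[] JODA_MINUTES =
            {11.65, 6.27, 3.83, 2.62, 2.35, 2.42, 2.47, 2.55, 2.60};

    // Speedup relative to the single-threaded run (the first element).
    static double speedup(double[] minutes, int index) {
        return minutes[0] / minutes[index];
    }

    // Index of the shortest execution time, i.e. the observed saturation point.
    static int fastestIndex(double[] minutes) {
        int best = 0;
        for (int i = 1; i < minutes.length; i++) {
            if (minutes[i] < minutes[best]) {
                best = i;
            }
        }
        return best;
    }

    static void print(String project, int[] threads, double[] minutes) {
        for (int i = 0; i < threads.length; i++) {
            double s = speedup(minutes, i);
            // Efficiency: the share of the ideal linear speedup that was achieved.
            System.out.println(String.format(Locale.ROOT,
                    "%-9s %2d threads: speedup %.2fx, efficiency %.0f%%",
                    project, threads[i], s, 100.0 * s / threads[i]));
        }
        System.out.println(project + " was fastest with "
                + threads[fastestIndex(minutes)] + " threads");
    }

    public static void main(String[] args) {
        print("AssertJ", ASSERTJ_THREADS, ASSERTJ_MINUTES);
        print("Joda-Time", JODA_THREADS, JODA_MINUTES);
    }
}
```

The efficiency column makes the diminishing returns visible: doubling the number of threads pays off well at first and then stops helping once the saturation point is reached.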

Further optimizations

When mutation testing is performed regularly on a large codebase, the amount of code changed between runs is usually small. To avoid re-running the analysis on all the code, PIT offers an incremental mode. When enabled, PIT determines which mutants could have had their result affected by the recent changes in the production code and tests. As a result, the analysis scope can be limited to those classes. This approach is perfect for a local analysis run by developers on their workstations or in a change/pull request to determine the “quality” of the recently introduced changes. For a large codebase the time savings can be tremendous. It is only worth remembering that the heuristic selecting which part of the analysis should be executed has some limitations, so from time to time it is good to run the full analysis anyway.
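
The general idea can be illustrated with a deliberately simplified sketch. It is not PIT's actual heuristic: all names below are hypothetical, and the rule used here (reuse a previous result only if neither the mutated class nor any test covering it has changed, judged by comparing content hashes) is an assumption made purely for illustration.

```java
import java.util.Map;
import java.util.Set;

// Hypothetical, simplified model of an incremental analysis decision.
final class IncrementalSketch {

    // Returns true if the result from the previous run can be reused for
    // a mutant in mutatedClass, given the tests that cover it.
    // Both maps go from a class name to a hash of its content.
    static boolean canReuse(String mutatedClass,
                            Set<String> coveringTests,
                            Map<String, String> previousHashes,
                            Map<String, String> currentHashes) {
        if (!unchanged(mutatedClass, previousHashes, currentHashes)) {
            return false; // production code changed, the mutant must be re-analysed
        }
        for (String test : coveringTests) {
            if (!unchanged(test, previousHashes, currentHashes)) {
                return false; // a covering test changed, the outcome may differ
            }
        }
        return true;
    }

    // A class counts as unchanged only if it was known before and its hash is the same.
    static boolean unchanged(String name,
                             Map<String, String> previousHashes,
                             Map<String, String> currentHashes) {
        String before = previousHashes.get(name);
        return before != null && before.equals(currentHashes.get(name));
    }

    public static void main(String[] args) {
        Map<String, String> previous = Map.of("Invoice", "a1", "InvoiceTest", "b1");
        Map<String, String> current = Map.of("Invoice", "a1", "InvoiceTest", "b2");
        // The test class changed, so the old result cannot be trusted.
        System.out.println(canReuse("Invoice", Set.of("InvoiceTest"), previous, current));
    }
}
```

Even this toy version shows why an occasional full run is a good idea: a change outside the tracked classes (a dependency, a resource file) would go unnoticed by such a check.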

Summary

With a few smart optimizations (here implemented in PIT) it is possible to reduce the (theoretical) brute force mutation testing execution time by as much as ~130 times (from 31 hours to less than 15 minutes in the case of AssertJ). With a high-end quad-core laptop it is possible to cut another chunk thanks to parallel execution. However, only a really powerful server machine (here a server with 32 virtual cores, which is not uncommon among CI server executors used in large projects) makes it possible to unleash the full power of PIT. The full analysis time was reduced by almost 3 orders of magnitude (!) (from 31 hours to less than 3 minutes in the case of AssertJ). This, together with incremental analysis, can make mutation testing feasible* to use on a daily basis in real-life, large enterprise projects (especially when unit tests make up the majority of the tests used in a project, but that is a topic for another blog post :) ).

Btw, what is your experience in using mutation testing in non-academic projects? Leave your comment below.

Self promotion. Would you like to improve your and your team’s testing skills and knowledge of Spock/JUnit/Mockito/AssertJ/PIT quickly and efficiently? I conduct a condensed (unit) testing training which you may find useful.