Learn how to leverage Spock 1.2 to slice the Spring context of a legacy application when writing integration tests.

Visit https://blog.solidsoft.pl/ to follow my new articles.

Have you ever started working on a legacy application and wanted to write some tests to get to know what is going on, and possibly to be notified about regressions? You know that feeling when you try to instantiate a single class and it fails with a NullPointerException. Six (painfully) replaced dependencies later, there are still errors coming from classes you had never heard of before. Sounds familiar?

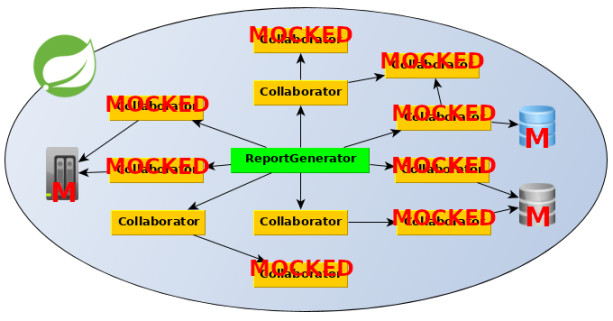

There are different techniques for dealing with hidden dependencies. There is a whole book dedicated to the topic (and probably a few others that I haven’t read). Occasionally, it may be feasible to start with integration tests and run through some process. It may be even more “entertaining” to see what exotic components are required just to set up the context, even if they are not needed at all in our case. Thank you, (too wide and carelessly used) @ComponentScan :).

Injecting stubs/mocks into the test context is a way to go as emergency assistance (see the last paragraph; there are better, yet harder, approaches). It can be achieved “manually” with an extra bean definition annotated with @Primary (usually a reason to think twice before doing that) for every dependency at whose level we want to make a cut (or for every unneeded bean which is instantiated along the way). @MockBean placed on a field in a test is handier, but we still need to define a field in our test and put the annotation on it (5? 10? 15 beans?). Spock 1.2 introduces a somewhat less known feature which may be useful here: @StubBeans.
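To make the “manual” variant more tangible, here is a minimal sketch of such an extra bean definition. It assumes Spring Boot Test and Mockito on the test classpath; the `KafkaClient` interface shown here and the `StubbedDependenciesTestConfiguration` name are hypothetical stand-ins, not code from the real application.

```java
import org.mockito.Mockito;
import org.springframework.boot.test.context.TestConfiguration;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Primary;

// Hypothetical stand-in for the legacy dependency; the real type lives in the application.
interface KafkaClient {
    void send(String topic, String payload);
}

// Test-only configuration; a top-level class like this has to be pulled in explicitly,
// e.g. with @Import(StubbedDependenciesTestConfiguration.class) on the test class.
@TestConfiguration
class StubbedDependenciesTestConfiguration {

    // @Primary makes this mock win whenever a KafkaClient is injected by type.
    @Bean
    @Primary
    KafkaClient kafkaClientStub() {
        return Mockito.mock(KafkaClient.class);
    }

    // ...one more @Bean @Primary method for every dependency to cut off
}
```

Keep in mind that @Primary only decides which candidate gets injected. The original bean definition stays registered, so a real bean that fails in its constructor would typically still have to be kept out of the context by other means.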

@StubBeans can be used to simply provide a list of classes whose (possible) instances should be replaced with stubs in the Spring test context. Of course, this happens before the real objects are instantiated (to prevent, for example, an NPE in a constructor). Thanks to that, up to several lines of stub/mock injections:

    @RunWith(SpringRunner.class) //Spring Boot + Mockito
    @SpringBootTest //possibly some Spring configuration with @ComponentScan is imported in this legacy application
    public class BasicPathReportGeneratorInLegacyApplicationITTest { //usual approach

        @MockBean
        private KafkaClient kafkaClientMock;

        @MockBean
        private FancySelfieEnhancer fancySelfieEnhancerMock;

        @MockBean
        private FastTwitterSubscriber fastTwitterSubscriberMock;

        @MockBean
        private WaterCoolerWaterLevelAterter waterCoolerWaterLevelAterterMock;

        @MockBean
        private NsaSilentNotifier nsaSilentNotifierMock;

        //a few more - remember, this is legacy application, genuine since 1999 ;)
        //...

        @Autowired
        private ReportGenerator reportGenerator;

        @Test
        public void shouldGenerateEmptyReportForEmptyInputData() {
            ...
        }
    }

can be replaced with just one (long) line:

    @SpringBootTest //possibly some Spring configuration with @ComponentScan is imported in this legacy application
    @StubBeans([KafkaClient, FancySelfieEnhancer, FastTwitterSubscriber, WaterCoolerWaterLevelAterter, NsaSilentNotifier/*, ... */])
    //all classes of real beans which should be replaced with stubs
    class BasicPathReportGeneratorInLegacyApplicationITSpec extends Specification {

        @Autowired
        private ReportGenerator reportGenerator

        def "should generate empty report for empty input data"() {
            ...
        }
    }

(tested with Spock 1.2-RC2)

It’s worth mentioning that @StubBeans is intended just to provide placeholders. When stubbing and/or invocation verification is required, @SpringBean or @SpringSpy (also introduced in Spock 1.2) are a better fit. I wrote more about them in my previous blog post.
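To illustrate what “just a placeholder” means, here is a plain-Java sketch built on a JDK dynamic proxy. It is not how Spock creates its stubs; the `PlaceholderStubs` helper and the methods of this `KafkaClient` interface are hypothetical, and the trick only works for interfaces.

```java
import java.lang.reflect.Proxy;
import java.util.Collections;
import java.util.List;

final class PlaceholderStubs {

    // Hypothetical legacy dependency, reduced to an interface for this sketch.
    public interface KafkaClient {
        boolean isConnected();
        int pendingMessages();
        String clusterName();
        List<String> topics();
        void send(String topic, String payload);
    }

    // Creates a do-nothing placeholder: every call returns a harmless default,
    // and no real implementation (or its constructor) is ever touched.
    @SuppressWarnings("unchecked")
    static <T> T placeholderFor(Class<T> type) {
        return (T) Proxy.newProxyInstance(
                type.getClassLoader(),
                new Class<?>[] {type},
                (proxy, method, args) -> defaultValueFor(method.getReturnType()));
    }

    // Primitives need a matching boxed value; returning null for them would fail at call time.
    static Object defaultValueFor(Class<?> type) {
        if (type == boolean.class) return false;
        if (type == char.class) return '\0';
        if (type == byte.class) return (byte) 0;
        if (type == short.class) return (short) 0;
        if (type == int.class) return 0;
        if (type == long.class) return 0L;
        if (type == float.class) return 0f;
        if (type == double.class) return 0d;
        if (type == String.class) return "";
        if (type == List.class) return Collections.emptyList();
        return null; // void and all remaining reference types
    }
}
```

A call such as `PlaceholderStubs.placeholderFor(PlaceholderStubs.KafkaClient.class).send("reports", "payload")` silently does nothing. This is enough to let a context start, but not enough once a test needs canned answers or has to verify that a call really happened.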

There is one important aspect to emphasize. @StubBeans is handy when we have some “legacy” project and want to start writing integration regression tests quickly to see the results. However, as a colleague of mine, Darek Kaczyński, aptly summarized, blindly replacing beans which “explode” in tests is just “sweeping problems under the carpet”. After the initial phase, when we are starting to understand what is going on, it is a good moment to rethink the way the context, both in production and in tests, is created. The already mentioned too wide @ComponentScan is very often the root of all evil. The ability to set up a partial context and put the parts together (if needed) is a good place to start. @Profile and conditional beans are very powerful mechanisms in tests (and not only there). @TestConfiguration and proper bean selection to improve context caching are also worth keeping in mind.

However, I started this article to present a new mechanism in Spock which might be useful in some cases, and I want to keep it short. There could be another, more generic blog post just about managing the Spring context in integration tests. I have to seriously think about it :).